Uniform usampler2D myUnsignedIntegerTexture

Then, to sample from the integer texture in a shader, you need the EXT_gpu_shader4 extension Again, check the internal format to see how OpenGL Hint an internal type of LUMINANCE16UI_EXT.

Here, you pass in the data as LUMINANCE_INTEGER, UNSIGNED_SHORT, and If you want it back in the range 0-65535, then do:Īs for LUMINANCE_INTEGER_EXT, this is part of the EXT_texture_integer extension, which is only supported Here, the samper2D “myTexture” is converting the 16 bit luminance pixel into 32 bit float RGBA.Īssuming the texture was stored as LUMINANCE16, you haven’t lost any data, it has just beenĬonverted to float in the range, and splatted to RGBA according to the spec: (L, L, L, 1). Vec4 color = texture2D(myTexture, vTexCoord) Now that your texture is on the GPU, you sample from it: Go buy new hardware, or tell your vendor to fix their driver.

#Opengl 32 bit float texture driver#

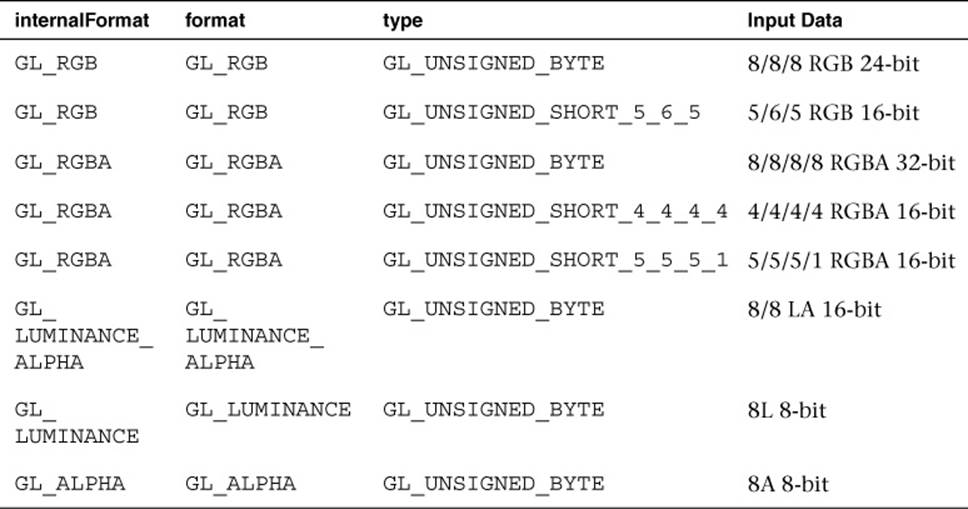

Something else, like LUMINANCE8, your driver or hardware doesn’t support what you’re trying If the internalformat comes back as LUMINANCE16, you’re in good shape. GetTexLevelParameteriv(target, level, TEXTURE_INTERNAL_FORMAT, &actual_format) Note, this is only a hint- some hardware doesn’t supportĪfter uploading your texture, take a look at how OpenGL actually stored it: Specifically, the format, type of the data you pass in should be LUMINANCE, UNSIGNED_SHORT.Īnd the internalformat should be LUMINANCE16, to hint to OpenGL that you want it to store TexImage2D(target, level, internalformat, width, height, border, format, type, pixels) Look at the arguments you use when you give OpenGL your image: Did you read the instructions? Everything you need to know is documented in
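A minimal C sketch, not OpenGL code: it only emulates on the CPU the arithmetic a LUMINANCE16 texture goes through, assuming the data was uploaded as UNSIGNED_SHORT. A normalized lookup returns value / 65535, splatted to (L, L, L, 1); multiplying by 65535.0 and rounding recovers the original 16-bit value. The names `Vec4`, `sample_luminance16`, `to_0_65535` and `via_luminance8` are hypothetical, texture filtering is ignored, and the LUMINANCE8 fallback assumes round-to-nearest quantization, which a real driver is not guaranteed to use.

```c
#include <assert.h>
#include <stdint.h>

/* RGBA result of a normalized texture lookup, like a GLSL vec4. */
typedef struct {
    float r, g, b, a;
} Vec4;

/* Emulates a normalized lookup of one LUMINANCE16 texel uploaded as
   UNSIGNED_SHORT: the value is divided by 65535 to land in [0, 1],
   then splatted to (L, L, L, 1). Filtering is ignored. */
Vec4 sample_luminance16(uint16_t texel) {
    float l = (float)texel / 65535.0f;
    Vec4 color = { l, l, l, 1.0f };
    return color;
}

/* The shader-side "color *= 65535.0" step, plus rounding to the nearest
   integer so the result can be compared exactly with the original value. */
uint16_t to_0_65535(float normalized) {
    assert(normalized >= 0.0f && normalized <= 1.0f);
    return (uint16_t)(normalized * 65535.0f + 0.5f);
}

/* Hypothetical fallback: what comes back if the driver silently stores the
   texture as LUMINANCE8 instead (assumes round-to-nearest quantization). */
uint16_t via_luminance8(uint16_t texel) {
    float l = (float)texel / 65535.0f;
    uint8_t stored = (uint8_t)(l * 255.0f + 0.5f); /* 8-bit internal value */
    float sampled = (float)stored / 255.0f;        /* normalized lookup result */
    return to_0_65535(sampled);
}
```

For example, a texel value of 1000 survives the LUMINANCE16 round trip unchanged, while `via_luminance8(1000)` returns 1028, because only 256 distinct levels survive the 8-bit storage.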
